Open-source large language models (LLMs) are developing rapidly. They are not yet as capable as paid commercial LLMs, but some are already good enough for tasks that support paper reading, such as automatic summarization and writing article reviews. They are also completely free: all you need is your personal computer and a sufficient power supply. PapersGPT now supports running local LLMs seamlessly in Zotero, and they are easy to run on both Windows and Mac.

When you install and start PapersGPT (v0.2.0 or later) for the first time, the system takes some time to automatically initialize the dependent libraries and installation packages required for running local LLMs. Please make sure your network connection is stable and can reach GitHub and Hugging Face. This process runs automatically in the background, so you do not need to do any tedious manual environment configuration or installation. On some Windows systems, the firewall may warn that there are risks. Please grant the relevant permissions so that the installation can proceed smoothly.
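If initialization seems stuck, a quick way to check whether your machine can reach GitHub and Hugging Face is a small script like the one below. It is a minimal sketch using only Python's standard library and is not part of PapersGPT; the names `can_reach`, `check_hosts`, and `HOSTS` are hypothetical. It only checks that each site answers an HTTPS request, not that every download PapersGPT needs will succeed.

```python
import urllib.error
import urllib.request

# Sites PapersGPT needs to reach during first-time setup (per the text above).
HOSTS = ("https://github.com", "https://huggingface.co")


def can_reach(url, timeout=10):
    """Return True if an HTTP(S) request to `url` gets any response at all."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status, so the network path works.
        return True
    except (urllib.error.URLError, ValueError, OSError):
        # DNS failure, refused connection, timeout, or a malformed URL.
        return False


def check_hosts(hosts=HOSTS, timeout=10):
    """Map each URL to whether it is reachable from this machine."""
    return {host: can_reach(host, timeout) for host in hosts}
```

Running `print(check_hosts())` prints a dictionary such as `{'https://github.com': True, 'https://huggingface.co': True}` when both sites are reachable; a `False` entry suggests a network, proxy, or firewall problem to fix before setup can finish.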

Once the environment for running LLMs is initialized, the Local LLM option appears in PapersGPT. It is configured according to your machine, with built-in open-source LLMs of matching sizes. Currently supported state-of-the-art (SOTA) LLMs include Gemma 3, Phi 4, Phi 4 Mini Reasoning, Qwen3, DeepSeek Distill Qwen3, Llama 3.2, and Mistral 3.1.

After you select a specific model, such as Gemma 3 4B, it is automatically downloaded from Hugging Face to your computer. Because LLMs are generally large, the download usually takes some time; you can follow its progress in PapersGPT. When the download finishes, the local LLM inference service is loaded and started automatically in the background.

You can then chat with local LLMs while reading papers, either about a single paper or about several papers together. For example, you can generate a literature review from multiple related papers in Zotero.

The agents used by PapersGPT may be mistakenly flagged as a Trojan or virus by Microsoft Defender or other antivirus software.

If this happens, you may see abnormal behavior, for example:

- The "Local LLM" item does not appear on the left side of PapersGPT.
- The local model cannot be downloaded to your computer.
- The local model cannot provide a chat service.

So if you want to use local LLMs on Windows, please allow the PapersGPT agents, which are safe, to run on your device in Microsoft Defender or your antivirus software.

When chatting with PDFs using local LLMs, make sure your computer is not in low-power mode, power-saving mode, or any similar mode. Chatting with PDFs through a local LLM requires a lot of GPU computation, and power-saving modes reduce GPU performance, which makes PapersGPT reply slowly.

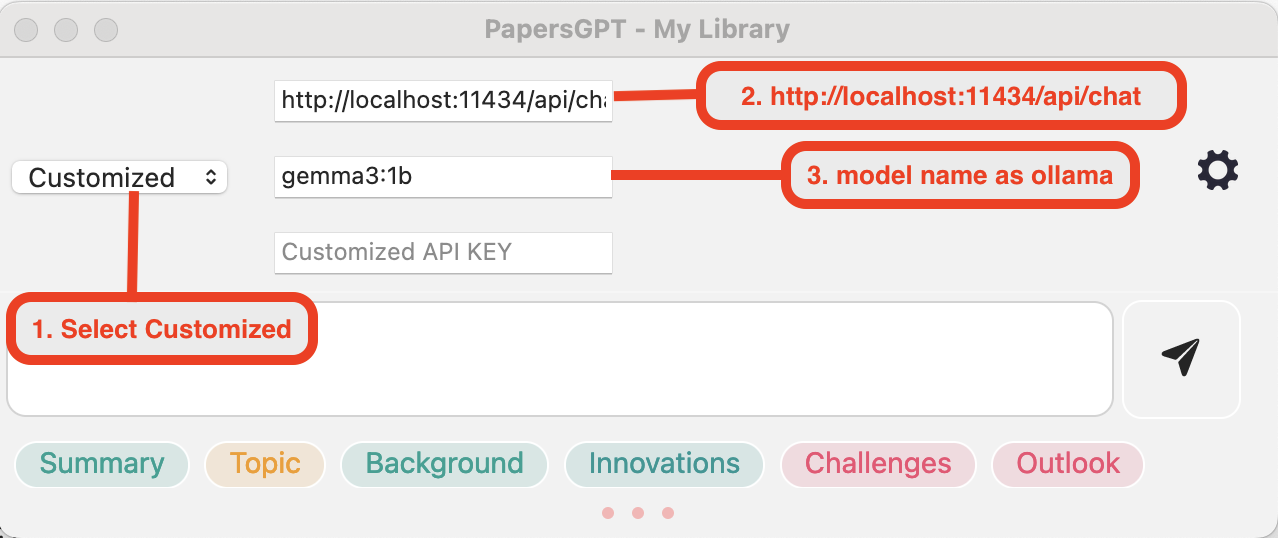

If you already use the Ollama app to start your local LLM service, simply select the Customized option in PapersGPT and set the API URL to http://localhost:11434/api/chat. Enter the same model name you use in Ollama, and leave the API KEY field empty.
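To confirm that the Ollama endpoint responds before configuring PapersGPT, you can send it a request yourself. The sketch below uses only the Python standard library and assumes Ollama is running on its default port (11434) with a model already pulled. It posts a non-streaming request to `/api/chat`, the same URL entered in PapersGPT, and reads the answer from the `message.content` field of the response. The helper names (`build_chat_payload`, `extract_reply`, `chat`) are hypothetical, and this is not necessarily how PapersGPT itself sends requests.

```python
import json
import urllib.request

# The API URL entered in PapersGPT's Customized option.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model, question, stream=False):
    """Build the JSON body for Ollama's /api/chat endpoint."""
    return {
        "model": model,  # must match a model name shown by `ollama list`
        "messages": [{"role": "user", "content": question}],
        "stream": stream,  # False: Ollama returns one JSON object, not a stream
    }


def extract_reply(response_body):
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    data = json.loads(response_body)
    return data["message"]["content"]


def chat(model, question, url=OLLAMA_CHAT_URL, timeout=120):
    """Send one question to a running Ollama server and return its answer."""
    body = json.dumps(build_chat_payload(model, question)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # No API key header is sent, matching the empty API KEY field in PapersGPT.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(response.read().decode("utf-8"))
```

For example, `print(chat("gemma3:4b", "What is a literature review?"))` prints the model's reply, assuming a model with that name is available; `gemma3:4b` is only an example tag, so substitute one from `ollama list`. A connection error means Ollama is not running, while an HTTP error usually means the model name does not match.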